On October 15 2015 I attended a one day conventionette called Lost Patrons of the Mother Black Cap. It was hosted by Dave Forrest’s Full52, and was honestly the best magic event I’ve ever been to.

During that convention I saw Dave Forrest perform one of his signature effects, REM. In REM a bunch of decisions are made by a spectator, leaving an arrangement of items on the table, which is then revealed to match a photograph. When you buy the trick, you get a method and a photograph.

But when Dave Forrest performed it at the Lost Patrons of the Mother Black Cap, he had one extra prop which you don’t get with the effect as sold. That prop was a 2 foot by 3 foot painting of himself with the props arranged in front of him.

It was, after all, his signature effect and so while all the hobbyists bought it as trick number 237 to half heartedly perform in front of bored colleagues, Dave was closing his cabaret shows with it, and the painting was something so permanent, so hard to fake, that it left no doubt in the minds of his spectators that this prediction was as good as set in stone.

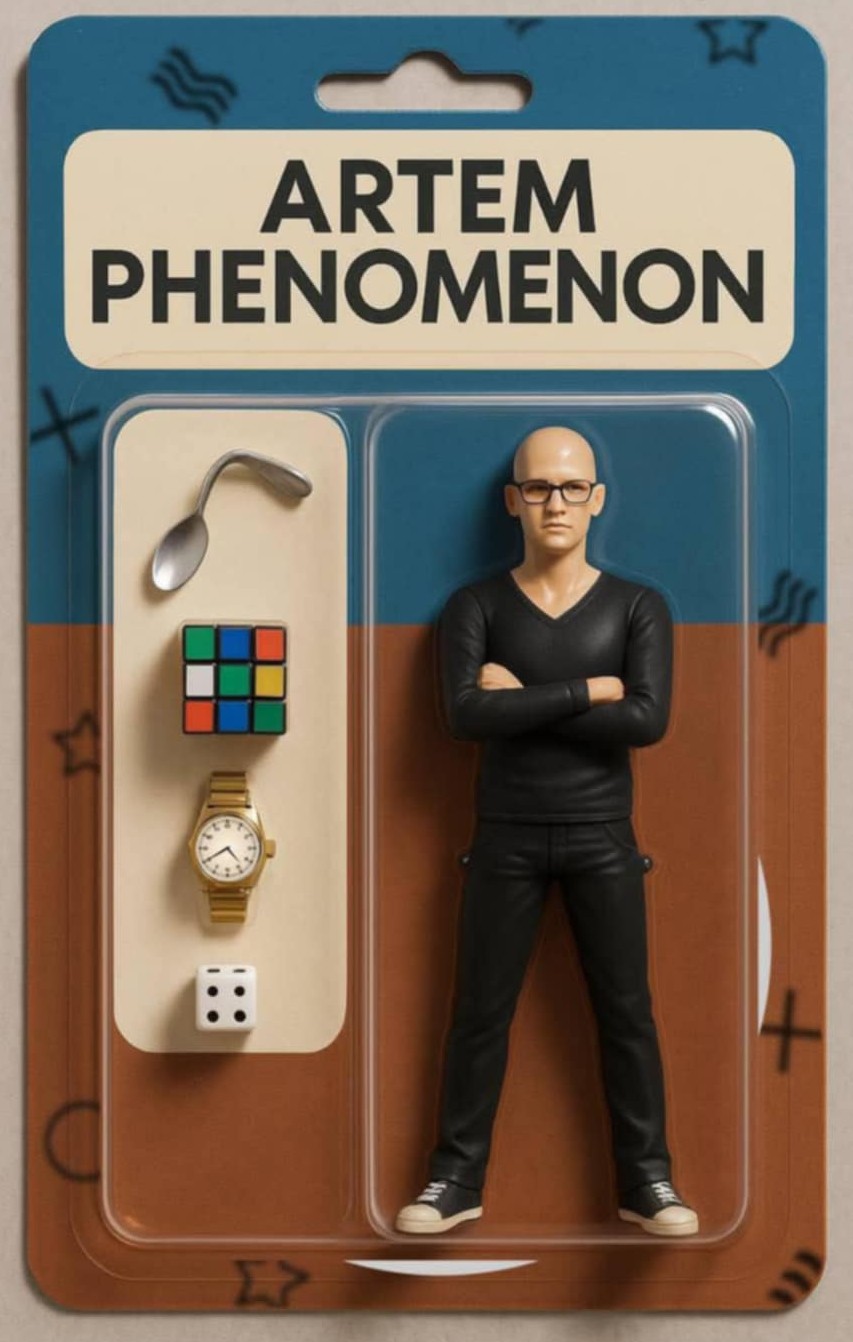

But now… I’m seeing a lot of these…

The purpose of this is the same as that of Dave Forrest’s oil painting: the dice, watch, and Rubik’s cube are in fact reveals of a matching cube mix, clock face, and dice roll in the performance. The difference is that magicians are putting things like this on their phones to show during walk around, and it all just feels a bit throwaway. But then the art is throwaway: someone typed some words into a website and this just happened. I have zero worries about this magician finding my blog and trying to do a copyright takedown on it because you cannot copyright AI generated images¹.

Briefly ignoring the impermanence of the image residing on a phone, these prompt driven generative image creators can ape stylistic forms such as oil paintings, watercolours, or anime frames². They can even mimic the styles of specific artists. If you bought Dave Forrest’s REM today you could take a photograph of yourself with the final arrangement of props, feed it into an image styliser and get that image printed onto a large framed canvas for about the cost of a decent peek wallet.

I’ve spoken before about low effort products being released cobbled together with AI art such as the Real Tarot Deck from David Regal. At the time I was on the fence about it being AI art or not, but then his next product Ha-bracadabra is clearly a book full of low effort AI slop with added joke captions.

In that earlier post I also mentioned that Alakazam was at least partially generating its newsletters, but I’ve since realised that pretty much every product since Jokerz has had neural network generated art on the packaging. They also use it extensively in their promotional images.

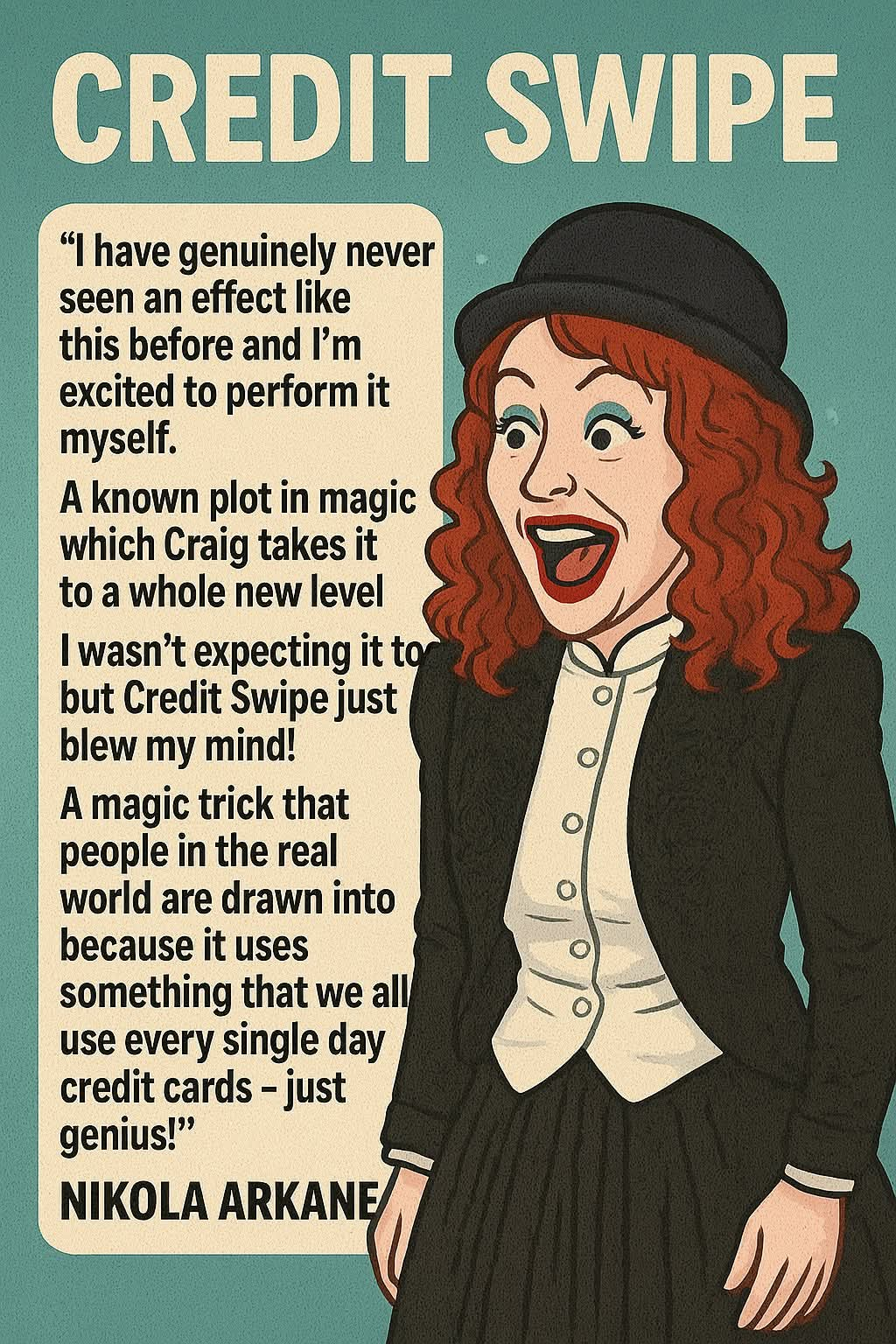

The trouble is that as these image generators get more powerful, it is harder and harder to spot their output. In a bizarre way the easiest way to identify someone switching to AI art is a sudden improvement in the quality of their graphics, such as the MagicTV thumbnails from Craig Petty now all having cartoon characters. Craig is also putting out promotional images with AI stylised pictures of famous magicians alongside their quotes about his work.

I mean look at this:

Nikola Arkane is way prettier than that³.

Recently someone prompted up a bunch of Disney-style portraits of the entire Magic Circle council, and most of them now use these as their social media avatars.

It really does feel like we have lost the war to automation. Magicians on the whole seem to lack the same empathy that other creatives have for their fellow artists. Those in other creative fields have taken a stance against AI because they recognise that their field may be next. As I have extensively written before however: magic is not an art but rather an industry, and industries have long ago been severed from the divine light of creation.

How did we get here?

The more I think about it the more I think this whole thing started with women laughing at salads.

I probably need to explain that in more detail.

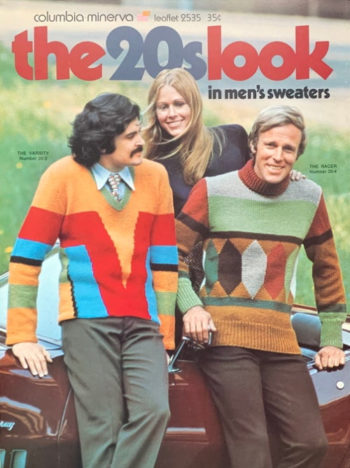

There was a time when marketing departments in companies would have entire teams of graphic designers and photographers to put together glitzy promotional images like this:

Believe it or not, every aspect of that image was meticulously planned, from the location to the models, the outfits to the lighting, probably even things like the focal length and framing. They would have snapped a whole film reel of pictures, manually colour graded them from the negatives, and selected the one best shot.

But then what happened was that this stuff got outsourced and the photography companies realised that lots of people were less specific about what they wanted. As such they could identify themes in commonly requested images to fill the pages and pages of adverts in fashion magazines, and stock photography became a thing. This is where we get women laughing at salads.

Stock photography took images from being a complex part of the creative process to being simply context free products to pad out content. In many ways it was like clip art, but clip art was easy to spot: each piece was individually drawn and, since they often shipped with the authoring software, everyone recognised when they were used. It had a similar effect to Comic Sans: using clip art could reduce marketing ephemera to the same cultural context as a photocopied invite to a child’s birthday party or a flyer for a staff outing to a brewery pinned to a notice board.

Stock photography could be produced in copious quantities just by taking photo after photo with the same people and setting. Since the dawn of digital cameras we don’t even have to worry about running out of film. There’s so much stock photography now that we don’t recognise it⁵.

This ability to pick from a list of thousands of near identical images to just drop into a document has done something to our notion of graphic production.

I’ve spent a lot of time designing things like the logo on this website and various other projects I’ve worked on. The photo I’ve put it with was taken by an event photographer, and used with permission from a guy called Andrew Merritt. I contracted an artist to make a drawn version of it. Didn’t really like the end result, still paid them £300. This is approximately the same amount of money I paid to get a bespoke piece of music I use in my linking rings routine.

I also paid a graphic artist £150 to rework my logo. Didn’t like the result, preferred my own. Still paid him. This isn’t because I’m rich, if I was I’d have gone through more iterations on those things, maybe even had a special photoshoot rather than picking a photo from a freebie cabaret show I got in on.

Art, photography, graphics, music, all have a value measured against the exchange rate of human labour and creative spirit.

For the longest time there was a line between the bespoke and the generic. If you picked from a list you got a woman laughing at salad; if you wanted it to be you laughing at the salad, or laughing at a specific salad, you had to put in the effort to make it yourself, or pay someone to do the same.

AI has come along and squared that circle. It has the convenience of the stock photo but with the bespoke customisation of creating from scratch. Now you can have a photo of yourself laughing at a salad on the back of a rainbow coloured elephant galloping over an ocean of robot prawns⁶ for less than the cost of a McRib meal.

If you recall an earlier post on here I mentioned using ChatGPT to fill out a book with garbage text, simply so that I would have a contextual wrapper around a drawing I showed in a performance. The drawing itself I paid an artist to create and the text was ultimately just padding. It could have been lorem ipsum but I thought it might be fun for the pages to read like diary entries of a paranormal investigator if the volunteer actually opened it to a random page. They didn’t, honestly I could have just filled it with garbage and no one would have noticed.

But one thing I have never talked about is that while prompting for the entries of this diary, I asked for things like instructions on how to build a ghost trap, a list of paranormal sightings, descriptions of a mushroom shaped cryptid, and most importantly a catalogue list of 50 haunted objects.

The reason that last one is important is that it was the output which made me the most uneasy, because it bore a startling resemblance to a Twitter thread from years earlier in which comedian and writer Guy Kelly added a description of a different haunted item every couple of days. I can’t find that thread anymore because Twitter is a swamp, but I can’t guarantee that any of them were not just wholesale copies of the creative writing in that thread, and that was possibly the most disturbing part of it all.

Where do we go from here?

When AI image, voice and video generation was still a broken little toy, you could very easily recognise where it was being used. Seriously, just look at the cover art for Jokerz. Just zoom in on that face and tell me it was ever graced by the loving care of a human hand. This isn’t the same as making mistakes. Humans make mistakes; this is more like the aesthetic equivalent of quantisation noise in the details. For a while this kind of crud in the details, poor lipsync, monotone voice lines, and frankly just the most fucked up hands you’ve ever seen used to be identifiers by which we could sort serious products from scams and grifts. Scams would always be done on the cheap: kickstarters for products that will never exist, low quality knockoffs, and overpromising gadgets⁷.

But now we’re at the stage where the machines are making near flawless reproductions of human art styles, so it’s not quite so easy to say whether a picture or voice is the product of generative AI or not. I’ve seen artists accused of using AI to produce things they definitely drew from scratch, but at the same time I’ve seen people insist they drew something which was clearly generated by a machine. There is even an algorithm now for taking a piece of AI generated art and deconstructing it back down through the stages which would have been used to draw it, so that you can play the result in reverse and it looks like a step by step of you hand drawing a picture which came out of a computer. We live in a post truth reality. Earlier this week my wife accused a human caller of using a computer generated voice on a soundboard over the phone. I mean he was actually a scammer, but the slick perfect tones of a recorded voice had become a de facto signifier of a scam call, so it briefly threw us when he responded like a human being.

I think this is what it boils down to – low effort shovelware, once identified by its lack of quality, now looks as perfectly polished as a soulless corporate high end product. The true mark of something with inherent worth is clear signs of human involvement, and if that means art that looks kind of amateurish, the occasional typo and misused grammar, so be it.

I was going to post this earlier but I held off, and I’m glad I did because today I was looking at Lybrary as I often do and so many of the ebooks had AI generated covers. They had that distinctly computer generated too perfect look, and if the covers are AI slop maybe the contents are too. Who knows? Maybe, just maybe, one or two of them were actually drawn by a person capable of drawing that well, but at this point I would rather buy a magic instructional manuscript with an awkward photo of the author, a terrible hand drawn cartoon, or Microsoft Word Art on the cover, than one which looks suspiciously too good for a small independent author.

To err is human, viva la human revolution!

¹ There has recently been the suggestion that this may change if enough people throw enough money at enough lobbyists to upend the entire system of incentivised creativity to make all art free game for AI training but the output of said training belongs to licensees, meaning that any kind of intellectual property rights are simply an asset to be bought, generated by tech companies by wasting vast amounts of compute power. Like NFTs but somehow taking over all human culture.

² Much to the chagrin of Hayao Miyazaki who said that even using hand written algorithms to animate human designed 3D models was an insult to life itself. I don’t know what he’d think about automatically trained data models churning out images from whole cloth with no human involvement, but my guess is that he wouldn’t like it.

³ Can I footnote a microreview opinion of the effect? I don’t see why not. Craig sells this as a totally new idea in magic but people have been doing magic with credit cards for years and the effect is essentially a restyled version of Daryl’s Presto Printo. It’s pitched as an organic EDC because everyone carries credit cards, but you’ll need to fit 10 fake credit cards into your wallet to perform this. I keep about 10 actual cards (credit, debit, ID, and loyalty) in my wallet and it’s already full. 2/10

⁴ And also the reason why if you’re seeking a career as a model, you should never be photographed drinking a glass of apple juice. Cannier souls than you have unknowingly become figureheads of the vibrant and diverse piss drinking community.

⁵ Okay, so I’m not going to pretend my readers are idiots. Some stock photography is recognisable. Hide-the-pain Harold and the Distracted Boyfriend meme have pretty much ruined the stock photography careers of all involved, simply because as the images become recognisable they carry a context with them and no longer work as stock photography: viewers no longer see a mid 20s white professional couple, they see The Distracted Boyfriend.

⁶ I’m not high enough to make up something more chaotic than that – not that I’m one to participate in recreational pharmaceuticals. If you want to really tap your third eye, contract bacterial endocarditis and spend six weeks isolated on high strength antibiotics.

⁷ There’s one doing the rounds at the moment for a robot dog which uses a combination of low quality videos of actual dogs alongside AI generated videos of said dogs being built in a factory. Again, the people making these are just using footage like Lego bricks to build a vague narrative, and like Lego bricks you can tell by looking at the smooth surfaces and inappropriate sharp corners that it’s not the real thing. For now.