This entry is tangentially related to David Regal’s new tarot deck. But not entirely. Perhaps not even legitimately. I currently have a question pending on the Vanishing Inc. website which may prove that my fears are unfounded. This time.

Indeed the last time I was super concerned about a technological innovation it was NFTs and despite my fears only one magician ever released an NFT project to my knowledge, and it was so hilariously bad that he sold none of them and pivoted to passive income training course scams.

But with the launch of Phill Smith’s Fusion Mosaic Phenomenon and Marc Kerstein’s Subliminal, the dawn of the AI-generated magic product has truly begun.

How Did We Get Here?

Stable Diffusion is (at the time of writing) the newest in a line of computational neural network techniques for randomly generating images based on text prompts. This line of work began as a by-product of image recognition systems, where the algorithm was run backwards to show which structures it was responding to when looking for particular objects.

Over time this led to Generative Adversarial Networks, or GANs. Adversarial neural networks are intended to be self-training: one neural network (the generative algorithm) attempts to fake the images the other (the recognition algorithm) is looking for. The recognition algorithm is fed a mix of genuine photos and the generator’s fakes and is scored on its ability to tell real from fake, while the generative algorithm is scored on its ability to fool the recognition algorithm. Those scores are then used to measure the success of tiny changes to the weights and biases in each algorithm.
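
To make that scoring loop concrete, here is a deliberately tiny sketch. It is a toy built on big assumptions, not how any real image model works: the “photos” are single numbers drawn from a bell curve centred on 0, the generative algorithm is just one adjustable number (`b`) added to random noise, and the recognition algorithm is a two-number logistic model, D(x) = sigmoid(w·x + c). The names and settings (`train_toy_gan`, the learning rates, the batch size) are made up for illustration. Each round, the recognition model is nudged to score real samples higher and fakes lower, then the generator is nudged to make its fakes score higher.

```python
import math
import random


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 2000, batch: int = 64, seed: int = 0) -> dict:
    """Toy adversarial training on 1-D numbers (hypothetical illustration)."""
    rng = random.Random(seed)
    b = 4.0          # generator: fake = noise + b, starting far from the real data
    w, c = 0.0, 0.0  # recognition model: D(x) = sigmoid(w * x + c)
    lr_d, lr_g = 0.2, 0.05

    for _ in range(steps):
        real = [rng.gauss(0.0, 1.0) for _ in range(batch)]      # "genuine photos"
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]  # generator output

        # Recognition step: lower its loss -log D(real) - log(1 - D(fake)),
        # i.e. get better at telling real from fake.
        gw = gc = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            gw += (d - 1.0) * x / batch
            gc += (d - 1.0) / batch
        for x in fake:
            d = sigmoid(w * x + c)
            gw += d * x / batch
            gc += d / batch
        w -= lr_d * gw
        c -= lr_d * gc

        # Generator step: lower -log D(fake) (the common "non-saturating" score),
        # i.e. get better at fooling the recognition model.
        gb = 0.0
        for _ in range(batch):
            x = rng.gauss(0.0, 1.0) + b
            d = sigmoid(w * x + c)
            gb += (d - 1.0) * w / batch
        b -= lr_g * gb

    return {"generator_shift": b, "disc_weight": w, "disc_bias": c}
```

Calling `train_toy_gan()` should leave `generator_shift` close to 0: the fakes drift from around 4 to where the real data lives, purely because the generator keeps chasing the recognition model’s score.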

At that point in time the best application was the production of fake faces purely for experimental purposes¹.

Then a similar technique was used to enhance photos. A neural network is given a blurry or noisy image and told to restore the original details. Its score is measured by how closely it matches the original image, and this score is used to update the algorithm. Over thousands of generations this process improves to the point where you can literally pass in raw noise, tell it what the image is meant to be, and it will use that prompt to reverse the noise into a randomly assembled image of the thing it is told to see.
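
A toy version of that “reverse the noise” loop can be sketched without any neural network, under one big simplifying assumption: instead of a trained network, it uses the mathematically ideal denoiser for a made-up dataset of a few 8-pixel “images”. `TOY_DATASET`, `ideal_denoiser` and `generate` are hypothetical names. This ideal denoiser simply memorises its examples, so the output is always a copy of one of them; a real model learns an approximation of it from millions of examples. The “prompt” here just picks which set of examples to use, standing in for a real model’s text conditioning.

```python
import math
import random

# Hypothetical toy "training set": tiny 8-pixel greyscale images per prompt.
TOY_DATASET = {
    "sheep": [
        [0, 1, 1, 0, 0, 1, 1, 0],
        [1, 1, 0, 0, 1, 1, 0, 0],
        [0, 0, 1, 1, 0, 0, 1, 1],
    ],
    "dog": [
        [1, 0, 0, 0, 0, 0, 0, 1],
        [0, 0, 0, 1, 1, 0, 0, 0],
    ],
}


def ideal_denoiser(x, sigma, images):
    """Lowest-error guess at the clean image behind x, assuming x is one of
    `images` plus Gaussian noise of size sigma. A real model approximates this."""
    logits = [-sum((a - b) ** 2 for a, b in zip(x, img)) / (2 * sigma ** 2)
              for img in images]
    top = max(logits)
    weights = [math.exp(l - top) for l in logits]  # numerically stable softmax
    total = sum(weights)
    return [sum(w * img[k] for w, img in zip(weights, images)) / total
            for k in range(len(x))]


def generate(prompt, steps=50, sigma_max=10.0, sigma_min=0.01, seed=0):
    rng = random.Random(seed)
    images = TOY_DATASET[prompt]
    # Noise levels shrinking geometrically from sigma_max to sigma_min, then 0.
    ratio = (sigma_min / sigma_max) ** (1 / (steps - 1))
    sigmas = [sigma_max * ratio ** i for i in range(steps)] + [0.0]
    # Start from pure noise.
    x = [rng.gauss(0.0, sigma_max) for _ in range(len(images[0]))]
    for sigma, sigma_next in zip(sigmas, sigmas[1:]):
        guess = ideal_denoiser(x, sigma, images)
        # Step towards the guess, keeping only the remaining fraction of noise.
        x = [g + (sigma_next / sigma) * (xi - g) for xi, g in zip(x, guess)]
    return x
```

`generate("sheep")` starts from random noise and ends on one of the three sheep patterns; a different `seed` may land on a different one.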

The latest fad at the time of writing is illusion diffusion, seen in the two magic tricks listed above, where the noise is pre-biased with light and dark areas or a different image, producing a generated image of one thing which contains within it a symbolic representation of another.
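
The pre-biasing idea can be bolted onto the same kind of toy. This sketch assumes an image-to-image style start: rather than pure noise, the loop begins from a hidden light/dark pattern plus a small amount of noise, and the (again hypothetical, memorising) ideal denoiser only knows how to make sheep. Real illusion tools may steer the model in more elaborate ways; `SHEEP`, `illusion_generate` and the hidden pattern are invented for illustration.

```python
import math
import random

# Hypothetical toy "sheep" images (8 greyscale pixels each), standing in for
# what a trained model has learned to draw.
SHEEP = [
    [0, 1, 1, 0, 0, 1, 1, 0],
    [1, 1, 0, 0, 1, 1, 0, 0],
    [0, 0, 1, 1, 0, 0, 1, 1],
]


def ideal_denoiser(x, sigma, images):
    """Lowest-error guess at the clean image behind noisy x (toy stand-in)."""
    logits = [-sum((a - b) ** 2 for a, b in zip(x, img)) / (2 * sigma ** 2)
              for img in images]
    top = max(logits)
    weights = [math.exp(l - top) for l in logits]  # numerically stable softmax
    total = sum(weights)
    return [sum(w * img[k] for w, img in zip(weights, images)) / total
            for k in range(len(x))]


def illusion_generate(hidden, images=SHEEP, sigma_start=0.2, steps=30, seed=0):
    rng = random.Random(seed)
    # Pre-biased start: the hidden light/dark pattern plus a little noise,
    # instead of pure noise.
    x = [h + rng.gauss(0.0, sigma_start) for h in hidden]
    ratio = (0.01 / sigma_start) ** (1 / (steps - 1))
    sigmas = [sigma_start * ratio ** i for i in range(steps)] + [0.0]
    for sigma, sigma_next in zip(sigmas, sigmas[1:]):
        guess = ideal_denoiser(x, sigma, images)
        x = [g + (sigma_next / sigma) * (xi - g) for xi, g in zip(x, guess)]
    return x
```

With `hidden = [0.9, 0.8, 0.1, 0.0, 0.9, 1.0, 0.2, 0.1]`, the output should be the sheep pattern `[1, 1, 0, 0, 1, 1, 0, 0]`, the one whose light and dark areas line up with the hidden image.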

This is my dog but diffused as an image of a field of sheep.

The Sticking Point

This probably sounds like an amazing system with no drawbacks, a way to compose photographs meeting criteria that would otherwise be impossible. How could it possibly be misused or in any way bad?

Well, the problem is that whilst these systems allow relatively straightforward generation of otherwise impossible² images, they also enable the rapid and simple generation of straightforward images, the kind that would once have been bought from a stock image library or commissioned as a custom photoshoot, automating away a segment of the economy.

Additionally, there has now been considerable effort to generate non-photorealistic images, such as cartoons, paintings, pencil sketches, woodcuts and the like. So that’s work for a huge creative industry being automated away. Much like the automation of factories, this was sold as a dream of productivity without effort: the machines can do the work for you, so you can work fewer hours and have more leisure time… Except, just like at every other point in history, the benefits of this productivity go straight to the capitalists, and the displaced workers are simply let go to fend for themselves, despite having spent their entire lives developing a set of skills which no one is willing to pay for anymore. Somehow, astoundingly, that’s not even the worst part.

Remember how I said the neural networks were trained by feeding them a mixture of real images and corrupted or fake images? Early GANs used large, expensive datasets of painstakingly labelled photographs, produced and curated for that purpose. But as the technology demanded larger and larger quantities of training data, some bright spark realised that the internet is full of images, many of which have descriptive alt-text captions for accessibility. Hundreds of thousands of photos are uploaded to Instagram with descriptive captions every hour. So they just started scraping as many images as possible. This included the works of millions of artists with portfolios on sites like artstation, deviantart, webtoon and others. This is so pervasive that many popular artists’ names can be used in prompts to generate images in their style, and so can the phrase “trending on artstation”, which steers the output towards the style of the most popular images on that site.

So not only is this technology putting highly skilled independent artists out of business, it’s built on their own work, which it used without their permission. The artists therefore are understandably quite pissed off.

The Trial of David Regal

So Subliminal and Fusion Mosaic Phenomenon are effects which could not realistically exist without this technology³. But that’s not really the primary use case for image generation. The reason big companies are throwing so much money behind developing this tech is to cut out skilled workers and reduce business costs in creative industries.

There was a large outcry when Disney, arguably one of the largest animation studios in the world, used generative AI to animate the title sequence of their Secret Invasion miniseries, and a central concern of the most recent American writers’ strike was their work being used to train their AI replacements.

Which brings us back to the question looming over The Real Life Tarot Deck by David Regal. A tarot deck contains 78 cards, each of which has a bespoke illustration. Stasia Burrington, the artist who works with The Jerx, sells a custom tarot deck for £60. A standard Rider Waite tarot deck, whose illustrations Regal’s deck imitates, has the benefit of age⁴, ubiquity and the economies of scale of high-volume mass manufacture, and costs £15.

David Regal’s deck, a low-volume production with entirely new illustrations for every card, sold to a niche audience of magicians who have enough interest in tarot to add it to a performance while not caring enough about tarot to consider a joke tarot deck quite crass, costs the paltry sum of £20.

That’s not enough money for a production of that scale, and magicians aren’t known for underselling their wares. Cube52, for comparison, costs £30, and those cards are smaller, there are only 54 of them, and they have blank monochrome faces, so there are no artwork costs involved. Perhaps the extra £10 is for those 9 hours of instruction, but drawing 78 custom illustrations takes way longer than 9 hours. Clearly something has to give.

“But we can’t know for certain that this product was produced with generated images.”

Arguments for the Prosecution

The cost alone raises questions but proves very little. This may be a passion project by an artist with a wicked sense of humour, but if that were the case, who is the artist? Tarot decks the world over, from the classic Rider Waite to modern bespoke decks, announce the names of their artists with pride, often as the main selling point. The only names associated with this deck are David Regal and, tangentially, Ed Marlo, whose ace cutting routine is included as part of the product.

I did wonder if perhaps David Regal himself had drawn these cards, but David Regal’s website has his bio listed as “Magician, Writer, Jew” and the gallery page is entirely photographs, almost all of them David being strangled by various celebrities. No illustration work to speak of.

So even if this artwork was human-produced, it was produced by an underpaid, uncredited artist. Is there any smoking gun for AI, though?

I’m going to argue yes. There are visual hallmarks of diffusion generated art, all of which appear in the cards of this set.

Detail Mashing

Detail mashing is a common feature of AI art generation attempting to produce a surface with a fine texture or pattern. Even a poor artist asked to draw foliage or a field of wheat will still tend towards regular patterns of individual details.

An AI, on the other hand, will blend fine detail together in an almost organic way, even when the object being drawn is constrained to a rigid mechanical or architectural structure.

The wheat in particular here has this blobby detail merging.

Malformed anatomy

One of the oldest complaints about AI art is that since it is randomly generating the figures in its images, the poses are also random, which often leads to them being posed oddly to the point of deformation. Ask an AI to generate a picture of a handshake and you’ll see the issue.

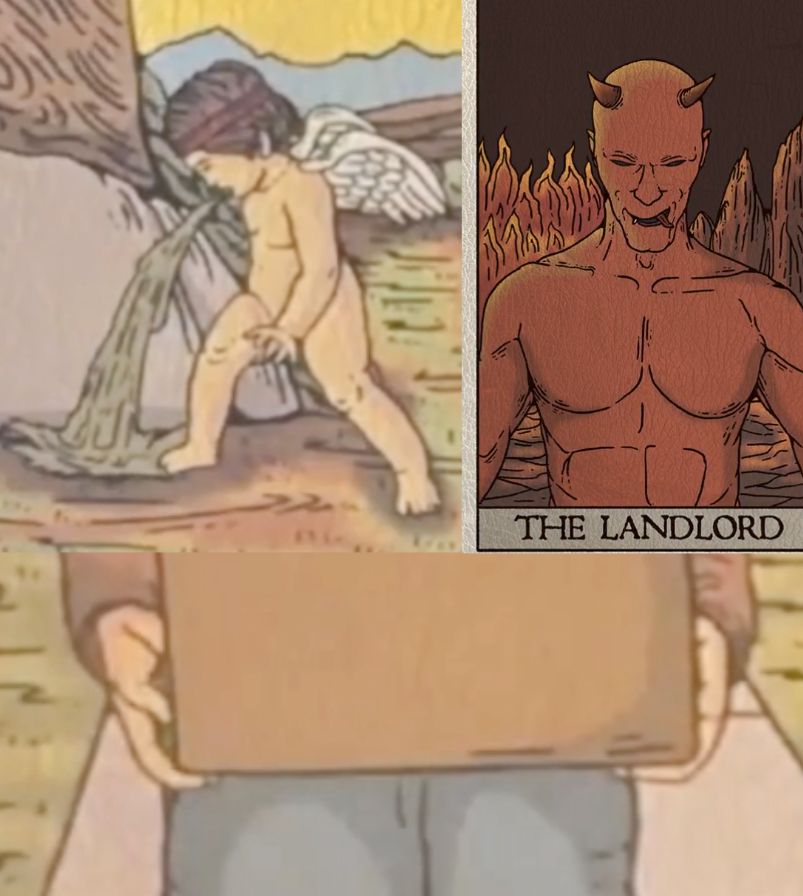

Admittedly, none of the cards shown in the trailer depict a handshake, but they do show human bodies, and from the odd grip of the hands in Moving Back In to the leg length mismatch in The Vomiting Cupid, these cards seem to have anatomical issues. The asymmetric details on The Landlord may also speak to this same issue, although I could imagine a human artist making those particular mistakes. The real tell, however, is that some areas of the image are too precise to have been made by a human hand capable of making such obvious mistakes in others.

Misspellings

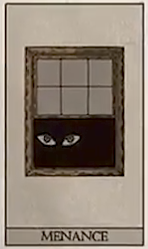

Okay so maybe this is a nitpick, and I only spotted it because another question on Vanishing Inc raised the issue. There is a card shown in a wide shot of the trailer with the word Menance. Menance is not a real word and, paired with the menacing imagery, is clearly meant to say Menace.

AI has a notoriously bad reputation for generating images of text, even worse than with hands, because text requires specific details to be arranged in exactly the right order, but I don’t think that’s what is at play here.

Rather, I think the Menance card is just a typo. Typographical errors are of course easily produced by humans (there are probably one or two in this article), but its presence speaks of a lack of care in production, which suggests there was no visual artist at the helm checking the final proofs.

The Bigger Picture

I have written about AI before, and in that post I expressed some of these concerns already, despite taking a favourable view of ChatGPT in terms of its usefulness for generating vaguely readable junk. That said, there was a detail I left out of my judgement, something I wasn’t sure I should share, which is that at least one of the writers submitting articles for the monthly Alakazam newsletter uses GPT to generate their column. That same author has also used it extensively to write magic books which are then sold to real people for real money.

When you buy a book you are paying not for paper and ink, but for the human ingenuity that went into the words within. When you buy a set of illustrations you aren’t paying for the printing of them, you probably have your own printer. You’re paying for the skilled human labour to produce the original artwork.

Legally, products made with Stable Diffusion artworks are absolutely 100% fine. But legally, copying that artwork and printing your own is also 100% fine because computer generated art is not copyrightable. With no human holding the pen the image is public domain. This precedent was reinforced by this photo:

Which was a photograph taken by a monkey. Specifically the monkey pressed the button to take the photo and officially that makes it public domain. The photographer who took the camera out there in the first place at great personal expense hates this fact.

The monkey’s name is Naruto.

9/10ths of the law

So, as I explained to my magic students just the other day, magic is kind of a law unto itself. If you make copies of DVDs or books that teach magic tricks, that’s a copyright issue. But if you teach someone a trick you read in a book, that’s no more a copyright issue than telling your friend the plot of the new Marvel movie is⁵. Indeed, unless it is patented (and most aren’t), no magic device is legally beyond replicating. You can copyright a script but not a performance, so if you figure out the methods and change the wording a bit, you can pretty much do Penn and Teller’s whole show⁶. Legally speaking, you can sell as many knock-off Losander tables as you like.

However

Magicians, on the whole, have their own code of ethics: a gentlemen’s agreement about not copying routines or tricks and not sharing the methods of published effects, to the point where the “rights” to reprint some tricks are still passed back and forth between producers long after any copyright on them has legally lapsed⁷.

Exposing the method of a magic trick is not a crime in any country on Earth but it is considered one of the highest crimes in magic.

I would have thought that this respect for creative efforts and the value of human ingenuity would carry forward into respecting artists and writers too much to fall back on tools like Stable Diffusion, Midjourney, ChatGPT and Google Bard in order to save a buck in the creation of a premium product where the art is one of its core value propositions.

But apparently not.

So what I’m going to say is this: unless David Regal can credit the artist, the deck clearly didn’t have one, so feel free to scan, copy, print and sell your own version of it. It’s legal, and it sends a message to magic publishers that they can’t get away with this while pretending that magic is a respectable art form. The same goes for any other magic products which make heavy use of AI-generated art for the purpose of avoiding the use of actual artists.

It’s what Naruto would have wanted.

¹ Although as you may know and in a plot twist that will crop up several times in this article, the fake faces were applied to nefarious ends by malicious actors. Specifically they were used to lend legitimacy to fake social media profiles acting as sock puppets for swaying political opinions by parroting a false consensus, or just to catfish lonely people on Tinder into sending money to fictional romantic partners who suddenly find themselves in dire straits.

² Okay I guess technically there are incredibly talented artists out there who would be capable of the kind of mind warping illusory images produced by illusion diffusion, certain pieces by Salvador Dali, Rob Gonsalves and M.C. Escher come to mind, but I can think of no way to generate photographic images of this sort without arranging all the elements for real, which for the scale of a landscape or building would render the process prohibitively expensive for any artist who wasn’t already preposterously wealthy and therefore not worried about AI taking their jobs.

³ Fusion Mosaic Phenomenon might be possible with time and effort from highly skilled creatives², but the real time generation of images on the fly used in Subliminal would be completely impossible without the tech.

⁴ Since the main production cost we’re discussing is the commissioning of artwork, the greatest saving made by publishers of the Rider Waite tarot deck is the fact that the artwork is now in the public domain, as both the author A.E. Waite and the artist Pamela Colman Smith died more than 70 years ago.

⁵ The baddie flies in from space and shoots a big laser and the goodies stop them using a combination of their powers, ingenuity and friendship. Don’t pretend you have a clue which Marvel movie I saw most recently.

⁶ Except the shadow rose, which Teller actually registered and copyrighted the choreography for. He’s a smart guy.

⁷ This may be changing slightly, as the 13 Steps to Mentalism and Tarbell’s Magic Course are both in the public domain, meaning they are freely available and have both been turned into Penguin Magic lecture series money spinners. Ironically, legally they could have done this even while the works were still in copyright; they just couldn’t have used the original names.